CIS ToF Camera Sensor – Now Available for Purchase Online

The CIS ToF camera sensor DCC-RGBD1, whose ROS package was released in 2019, is now available for purchase online.

If you buy the camera sensor on Amazon.co.jp, it can only be shipped within Japan. Outside Japan, you can purchase it by contacting CIS Corporation sales at ec-sales@ciscorp.co.jp.

TORK released a new ROS driver package for the CIS ToF camera sensor

We released a new ROS package “cis_camera” (https://github.com/tork-a/cis_camera).

This ROS package is a driver for the ToF (Time of Flight) camera sensor DCC-RGBD1 from CIS Corporation (https://www.ciscorp.co.jp/).

DCC-RGBD1 is a small ToF camera sensor (development kit) that can acquire depth images over a wide range of distances.

- High-accuracy depth images can be acquired in the range of 15 cm to 5 m.

- Small size: H 50 mm × W 55 mm × D 35 mm (excluding protrusions)

- Simultaneous acquisition of RGB (QVGA) and Depth / IR (VGA) images

- Interface: USB 3.0 (USB 3.0 Micro-B connector; USB bus power is not supported)

- For indoor use

In addition to the ROS driver for the CIS ToF camera sensor, this package includes a sample program for point cloud processing (noise removal, plane detection and removal, object point cloud extraction, and calculation of the object frame position), along with a launch file that draws these processing results in 3D in RViz.

Please refer to the documentation on GitHub for usage.
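The plane-removal and object-position steps can be illustrated with a minimal pure-Python sketch. It assumes RANSAC for plane fitting and the centroid of the remaining points as the object position; the package's own sample program may use PCL and different methods, and all function names here are hypothetical.

```python
import math
import random


def plane_from_points(p1, p2, p3):
    """Return (unit normal n, offset d) of the plane n.p + d = 0, or None if collinear."""
    ux, uy, uz = (p2[i] - p1[i] for i in range(3))
    vx, vy, vz = (p3[i] - p1[i] for i in range(3))
    # Cross product of the two edge vectors gives the plane normal.
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    norm = math.sqrt(nx * nx + ny * ny + nz * nz)
    if norm < 1e-12:
        return None
    n = (nx / norm, ny / norm, nz / norm)
    d = -(n[0] * p1[0] + n[1] * p1[1] + n[2] * p1[2])
    return n, d


def ransac_plane(points, threshold=0.01, iterations=200, seed=0):
    """Fit the dominant plane with RANSAC and return the indices of its inliers."""
    rng = random.Random(seed)
    best = []
    for _ in range(iterations):
        model = plane_from_points(*rng.sample(points, 3))
        if model is None:
            continue
        n, d = model
        inliers = [i for i, p in enumerate(points)
                   if abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d) < threshold]
        if len(inliers) > len(best):
            best = inliers
    return best


def extract_object(points, threshold=0.01):
    """Remove the dominant plane (e.g. a table) and return the rest plus its centroid."""
    plane = set(ransac_plane(points, threshold))
    obj = [p for i, p in enumerate(points) if i not in plane]
    if not obj:
        return [], None
    centroid = tuple(sum(p[k] for p in obj) / len(obj) for k in range(3))
    return obj, centroid
```

For example, with a 10 × 10 grid of points at z = 0 standing in for a table and eight points around (0.2, 0.2, 0.1) standing in for an object, `extract_object` keeps only the eight object points and reports a centroid at about (0.2, 0.2, 0.1).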

If you have problems with this package, please report them on GitHub Issues.

- GitHub Site ( Including a Quick Start guide )

- GitHub Document

- GitHub Document ( PDF )

- GitHub Issues

For inquiries about obtaining the CIS ToF camera sensor hardware, please contact:

For hardware inquiries: Sales Representative, CIS Corporation

Email address: newbiz@ciscorp.co.jp

Phone number: +81-(0)42-664-5568

Translation of ROS-I training material

What is ROS-Industrial?

If you look into using ROS with robot arms, for example in industrial applications, you will soon come across “ROS-Industrial (ROS-I)”. Does it support my robot or my application? What is ROS-I, and is it different from ROS? We at TORK have received many such questions.

ROS-I is the name of an international consortium. Its member companies share the technical problems they face in using ROS industrially, and members provide packages and solutions (based on contracts). ROS-I standards and packages are not independent of ROS; in the end, it is all part of the ROS ecosystem.

Packages developed and managed by ROS-I are shared only among member companies for a while, but eventually they are released as open source under the contract. The ROS-I repositories are the result.

ROS-I regularly holds training courses on using these results in North America, the EU, and Asia (in Singapore). Each course takes about three days, and you can take it even if you are not a ROS-I member.

Furthermore, the training materials are also public, so anyone interested can study the main ROS-I packages by referring to them.

In addition to basic explanations and setup of ROS, the material covers writing nodes in C++, MoveIt!, “Descartes” (a Cartesian path planning package recently developed in ROS-I), point cloud processing, image processing, and more. The exercises are mainly hands-on experiments on your own PC or VM.

To be honest, I think the material is fairly difficult and not for beginners. But if you have already learned ROS and are about to work with industrial robots, it should be a very helpful reference.

TORK translated the training material into Japanese!

“But it is in English…” There is good news for you! We have translated the material of this training course into Japanese, and the translation is now complete!

ROS Industrial training material in Japanese

It would make a fun challenge for the winter vacation.

Please note: if you find a problem, mistake, or point for improvement in the Japanese version, please contact TORK rather than the original authors.

Have a wonderful year!

TORK is participating in B-Boost Conference

TORK has been invited to the open source software conference “B-Boost”, held in Bordeaux, France on November 6 and 7. We will talk about robots and ROS.

We will report on how it goes. I'm off!

World MoveIt! Day 2018 in Kashiwa-no-ha was held

World MoveIt! Day 2018 in Kashiwa-no-ha was held last Friday! Here are some photos from the day. I hope they convey the atmosphere to those who could not participate.

Before the opening

Opening

Finally, World MoveIt! Day 2018 in Kashiwa-no-ha started! First, there was an explanation of example challenges for the hackathon.

Next, the companies exhibiting robots at the venue introduced their robots.

Hackathon (morning) starts!

Everyone is working away quietly. Some people tried their programs on actual robots.

Lunch

Good work this morning! It is lunch time.

Presentation by participants

Presentations by participants began during lunch.

Omron Sinic X Co., Ltd. gave a report on their participation in the WRS2018 product assembly challenge.

A presenter introduced new features of MoveIt! Task Constructor. They seem to enable parallel tasks, such as moving while grasping an object, which are not possible today.

Seed Solutions presented practical examples of SEED-noid, based on demonstration experiments at a competition set in a convenience store and a restaurant.

Another presentation introduced a useful tool for checking the angle of each joint when moving a robot with MoveIt!.

Hackathon (afternoon) starts!

After the presentations, the hackathon resumed.

Achievement presentation

Thank you for your hard work! The hackathon is over. Time to present what we worked on all day.

Many of the results should be helpful for future participants!

Thank you very much for all the participants! Let’s meet next year!

TORK received “RT Middleware Promotion Contribution Award”

We received the “RT Middleware Promotion Contribution Award” from the Japan Robot Industry Association, jointly with Kawada Robotics, in recognition of our software support for NEXTAGE Open. We will continue to contribute to open source software in the robotics field.

ROS Workshop for Beginners on 12th September 2018

We had ROS Workshop at Yurakucho, Tokyo.

We used a custom LiveUSB with ROS preinstalled, so attendees could try ROS without changing their PC environment.

All attendees worked through all the topics.

Great work, everyone!!

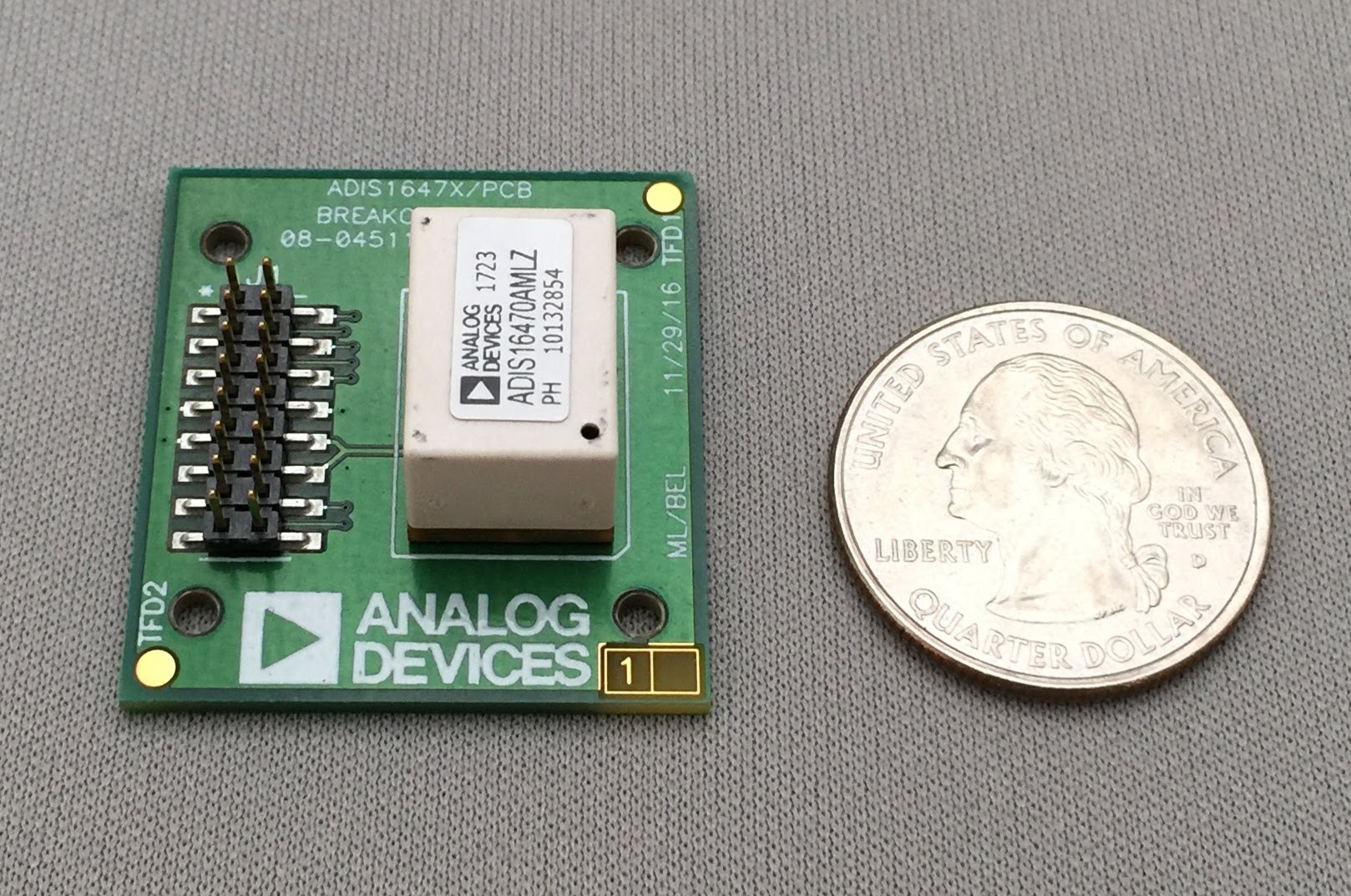

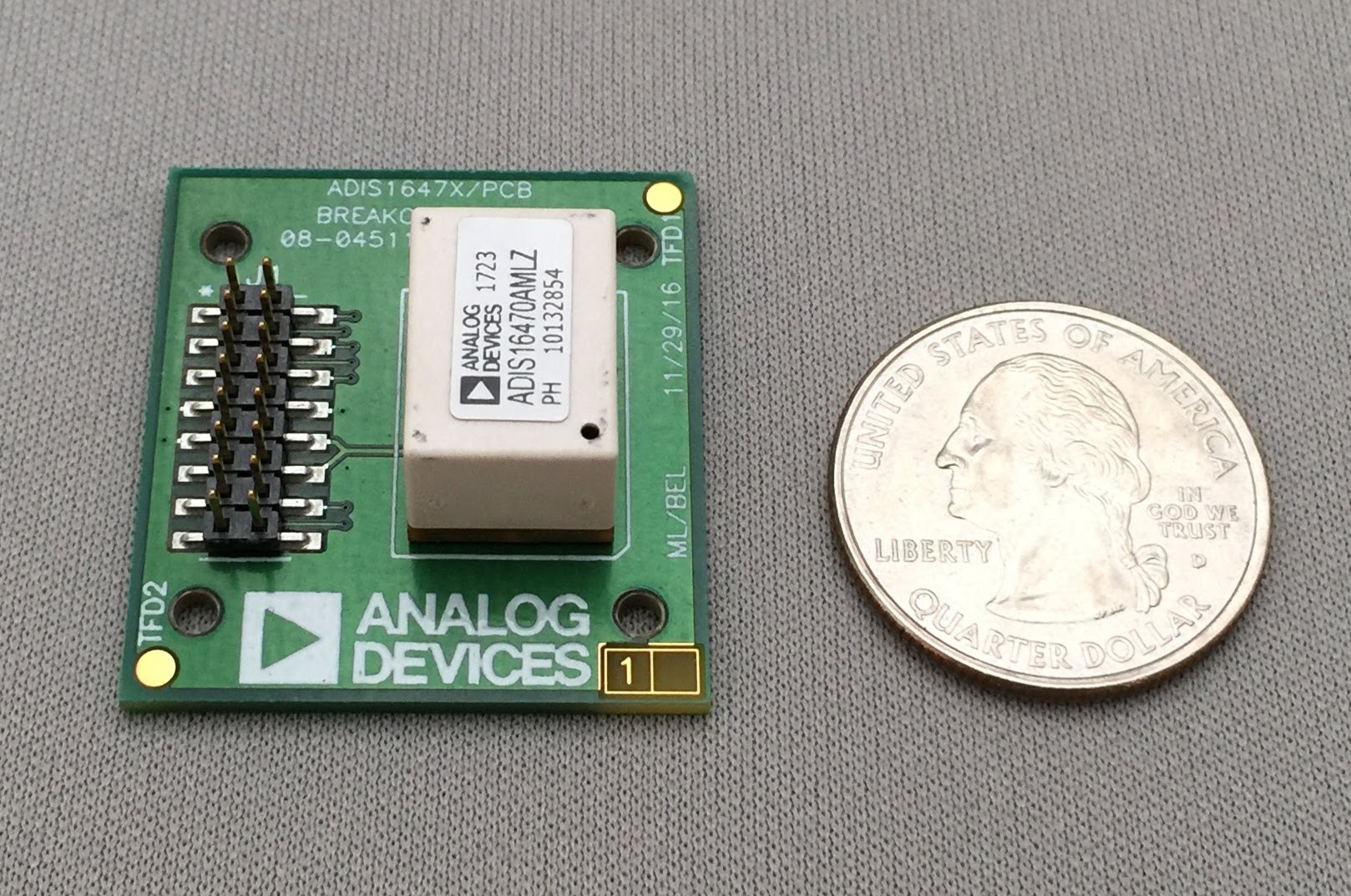

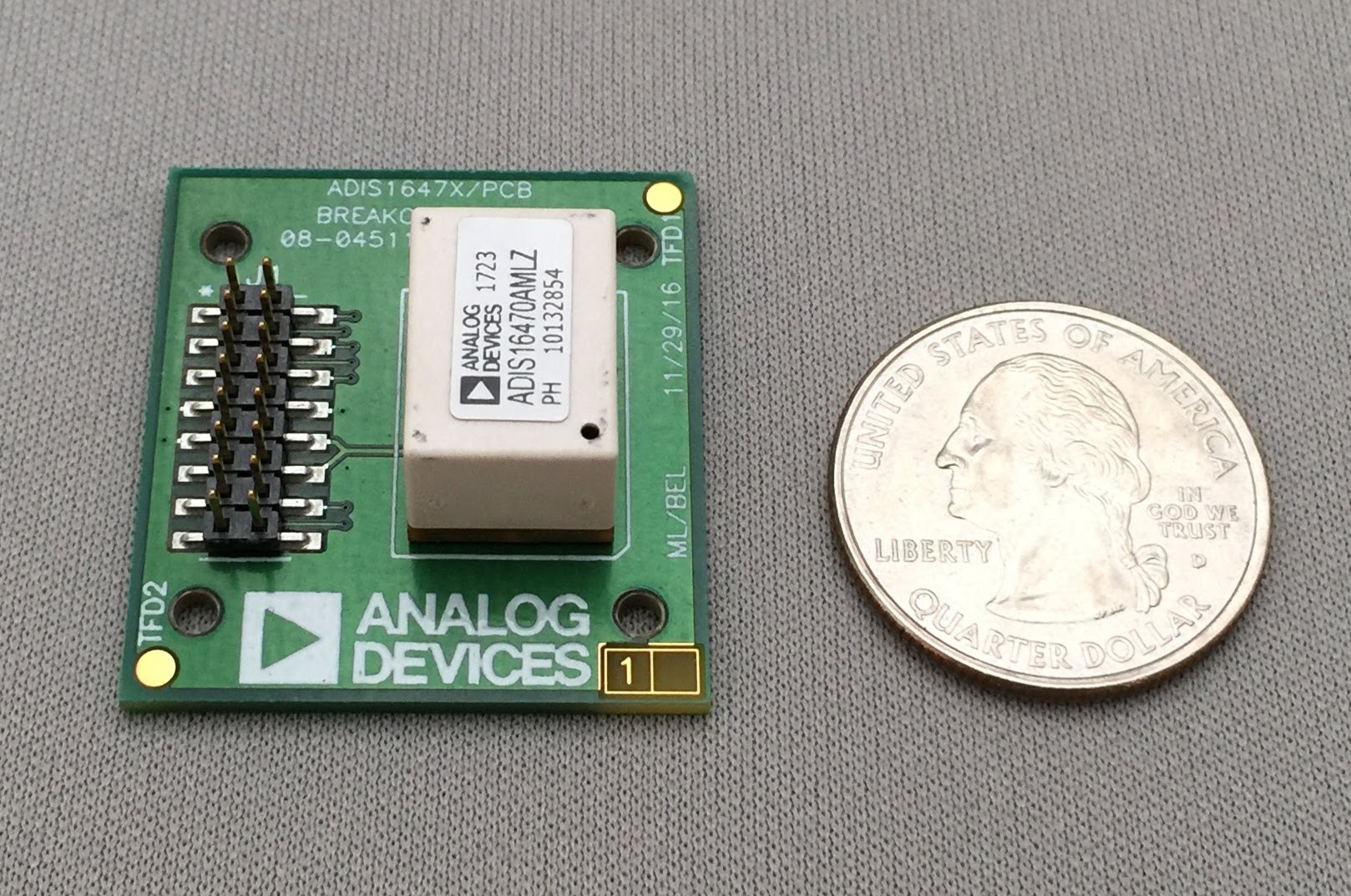

Analog Devices Inc. 9th September Press Release “IMU ADIS16470 ROS driver package”

Have you tried adi_driver, the new ROS driver package for ADI (Analog Devices Inc.) sensors?

Analog Devices Inc. issued a press release titled “Released IMU ADIS16470 ROS driver package”.

http://www.analog.com/jp/about-adi/news-room/press-releases/2018/9-10-2018-ROS%20Driver-ADIS16470.html

We have received many inquiries, and its GitHub repository is seeing a lot of activity.

Let’s get an IMU ADIS16470 and use it with its ROS package!

GO ROS!

It is a compact, high-performance IMU breakout board

We participated in ROS-I Asia Pacific Workshop!

We participated in the ROS-Industrial Asia Pacific Workshop held in Singapore on June 27 and 28. (Sorry, this post is a bit late; we were waiting for the official page to be updated.)

- ROS-Industrial AP Workshop 2018: https://rosindustrial.org/events/2018/6/27/ric-ap-workshop

This was TORK's first participation. Rather than technical content, there were many introductions of projects in each country and of corporate initiatives, which left a different impression from ROSCon, which focuses on ROS packages and technology. Instead, I got to know the activities of major overseas companies and startups. It was also very pleasant to have many opportunities to chat with them and get acquainted.

TORK also introduced its activities and the jog_control package, and exhibited the ROS-native teaching pendant under development, which attracted a lot of interest.

ARTC has a very spacious and stylish building named “Future Factory”, where many industrial robots are lined up side by side. ARTC's robot team gave demonstrations; their research combines ROS with the latest industrial robots and targets themes such as Scan-N-Plan and object recognition. Unfortunately, workshop participants were not allowed to take photos, but I think pictures will be released on the official blog and elsewhere.

Throughout the workshop, “nobody doubted the industrial application of ROS” (which is natural, since it is a ROS-I event). ROS-I participants are concretely linking ROS to applications and strengthening their product capabilities. Companies in China and Taiwan are also actively releasing ROS and ROS 2 products. Although there are certainly challenges in applying ROS industrially, there are efforts such as package creation support by ROSIN, mechanisms for code quality management, and development of the fundamental Scan-N-Plan technology. Most of all, I found it wonderful that they keep a loop of getting their hands moving and publishing the results, which is exactly what we at TORK want to do.

And the organizers did a wonderful job! I would like to thank them for a very fun and comfortable workshop with kind attention to detail.

ROS Workshop Schedule in September and October 2018

Here is the schedule of our ROS workshop series in September and October 2018!

Oct. 2 Tue. 13:30- Introductory

Venue: Nagoya, Aichi

Sep. 12 Wed. 13:30- Introductory

Oct. 3 Wed. 13:30- Introductory

Venue: Yurakucho, Tokyo

Inquiries: info[at]opensource-robotics.tokyo.jp

ROS Workshop for Beginners on 8th August 2018

We had ROS Workshop at Yurakucho, Tokyo.

We used a custom LiveUSB with ROS preinstalled, so attendees could try ROS without changing their PC environment.

All attendees worked through all the topics.

Great work, everyone!!

TORK Turns 5!

We’re happy to celebrate TORK's 5th anniversary on August 8th, 2018!

Thank you all for your business with us and for your understanding of open source robotics.

We will continue to make further efforts to respond to customers’ requests and to contribute to the progress of open source robotics in industry and academia.

Lecture on ROS at RSSR meeting

We gave a lecture on 10 years of ROS and future trends at the RSSR regular research meeting.

http://rsi.c.fun.ac.jp:8200/Plone/rssr/meeting/meeting2018-2

We are grateful for the chance to talk about ROS.

We also accept consultations on on-site workshops, ROS consulting support, and other OSS.

Please feel free to contact us.

info[at]opensource-robotics.tokyo.jp

Information on the summer holidays

Thank you very much for your continued patronage of our service.

Please note that our company will be on summer holiday during the following period.

Summer holidays: August 11 to August 19

Inquiries such as e-mails received during the holiday will be answered in order from August 20 (Monday).

We apologize for any inconvenience. Thank you for your understanding.

ROS Workshop for Beginners on 25th July 2018

We had ROS Workshop at Yurakucho, Tokyo.

We used a custom LiveUSB with ROS preinstalled, so attendees could try ROS without changing their PC environment.

All attendees worked through all the topics.

Great work, everyone!!

ROS Workshop Schedule in August 2018

Here is the schedule of our ROS workshop series in August 2018!

Aug. 7 Tue. 13:30- Introductory

Venue: Nagoya, Aichi

Aug. 8 Wed. 13:30- Introductory

Venue: Yurakucho, Tokyo

Inquiries: info[at]opensource-robotics.tokyo.jp

ROS Workshop Schedule in July 2018

Here is the schedule of our ROS workshop series in July 2018!

Jul. 24 Tue. 13:30- Introductory

Venue: Nagoya, Aichi

Jul. 25 Wed. 13:30- Introductory

Venue: Yurakucho, Tokyo

Inquiries: info[at]opensource-robotics.tokyo.jp

We are heading to ROS-I Asia Pacific Workshop!

TORK will participate in the ROS-Industrial Asia Pacific Workshop, which will be held in Singapore on June 27 and 28.

We expect many talks from ROS-I developers such as SwRI and Fraunhofer IPA, and from corporate members such as Open Robotics, ADLINK, and Boeing. What kind of stories will we hear?

TORK would like to present a wide range of its activities, such as the packages currently under development. I will also report on the workshop on this blog.

(Notice) ROS advanced seminar

Two months ago we announced our free ROS seminar. Since then, our meeting room has been occupied almost every Friday by participants from industry in Japan. We appreciate such interest in our seminar and are glad to see the growing interest in open source robotics in Japan! So we are now preparing the next phase: we plan to start an advanced seminar in October 2014. Topics will include operating your own robots with MoveIt!, the motion planner. As before, the seminar will be held in Japanese, but any inquiries are welcome at info[at]opensource-robotics.tokyo.jp.

“jog_control”: package of jog control for robot arms (1)

ROS and MoveIt! are very powerful tools for robot arms. With the basic functions of ROS and the ros_control framework, any robot arm can be moved through a unified interface. MoveIt! can easily plan robot arm motions that take obstacle avoidance and constraints into account, using various algorithms. Looking at the ROS-Industrial repositories, many packages have already been released for various industrial robot arms that work with ROS and MoveIt!. These functions are a major incentive to use industrial robot arms with ROS.

However, once you actually use it, you have probably noticed functions that industrial robot arms support but that do not exist in ROS: jogging and teaching.

Jogging is a function that moves the robot's joints and hand a little at a time to reach a target posture. With jogging, you can move the robot step by step by giving small displacements to its joint angles while visually observing it. It is essential for aligning the hand with a workpiece. The MoveIt! RViz plug-in lets you specify the target position and orientation of the robot's hand with a GUI (Interactive Marker) using the mouse. However, you may notice that it is quite difficult to move the robot exactly as desired with the RViz plug-in while it is working in a real environment.

Teaching is a programming-like function that stores target robot postures and then describes the order and conditions of the target task (as a very simplified explanation).

On commercially available industrial robots, these functions are usually built into the controller and teaching pendant. You can choose to use them; however, with ROS robots we sometimes struggle to teach the robot a task, even for a simple demo.

The “jog_control” meta-package adds jog operation to ROS and MoveIt!.

It supports “joint jog”, which gives a small angular motion to a joint, and “frame jog”, which gives a small displacement of position and orientation to the target coordinate frame.
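To make the joint jog idea concrete, here is a minimal, hypothetical sketch of the arithmetic only: small per-joint displacements are added to the current joint angles and the result is clamped to the joint limits. It is not code from jog_controller; the real nodes also handle frame jogs (which need inverse kinematics) and send the result to the robot's controller. The function and type names are our own.

```python
from dataclasses import dataclass


@dataclass
class JointLimit:
    lower: float  # rad
    upper: float  # rad


def apply_joint_jog(current, names, deltas, limits):
    """Return new joint targets after one joint jog step.

    current: dict joint name -> current angle [rad]
    names, deltas: parallel lists (which joints to jog and by how much [rad])
    limits: dict joint name -> JointLimit
    """
    if len(names) != len(deltas):
        raise ValueError("names and deltas must have the same length")
    target = dict(current)  # joints that are not jogged keep their angle
    for name, delta in zip(names, deltas):
        if name not in target:
            raise KeyError(f"unknown joint: {name}")
        lim = limits[name]
        # Clamp so that a jog can never push a joint past its limit.
        target[name] = min(max(target[name] + delta, lim.lower), lim.upper)
    return target
```

Clamping at the limits, rather than rejecting the whole command, is one possible design choice; a real controller might instead refuse the jog or slow down near a limit.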

The “jog_msgs” package is a new message package for sending jog command values. It includes JogJoint.msg, which gives the displacement of a joint jog, and JogFrame.msg, which gives the displacement of a frame jog.

The “jog_controller” package is a package that receives these messages and actually moves the robot. Currently, there are jog_joint_node for the joint jog and jog_frame_node for the frame jog. It also includes the rviz panel plug-ins “JogJointPanel” and “JogFramePanel” to give these command values.

With these packages, you will be able to jog various robot arms, for instance the UR5, Denso VS060, TRA1, NEXTAGE Open, and other robots in the ROS-I repositories.

These packages are still under development: features and verification are not yet sufficient, and documentation is missing. If you are interested, please try them with your own robot and report problems in Issues. We would like to make the packages better with your feedback.

We are also working on a new teaching function, which we would like to introduce in another article.

ROS Workshop Schedule in June 2018

Here is the schedule of our ROS workshop series in June 2018!

Jun. 20 Thu. 13:30- Introductory

Venue: Yurakucho, Tokyo

Jun. 18 Tue. 13:30- Introductory

Venue: Nagoya, Aichi

Inquiries: info[at]opensource-robotics.tokyo.jp

IMU ADIS16470 and adi_driver will be on display at the 7th IoT/M2M Expo!

Have you tried adi_driver, the new ROS driver package for ADI (Analog Devices Inc.) sensors?

The “IMU ADIS16470 Module”, for which TORK made the ROS driver package, will be exhibited at the 7th IoT/M2M Expo Spring!

Dates: May 9 (Wed) – 11 (Fri), 2018

Venue: Tokyo Big Sight, Japan

Booth: W7-48 (Venue A, 1st Floor at West Hall)

IoT/M2M Expo Spring is a long-awaited special exhibition gathering all kinds of IoT (Internet of Things)/M2M related products and services.

A great number of providers and system integrators will visit IoT/M2M Expo Spring to conduct face-to-face business with exhibitors.

Please come to Booth W7-48 and see the IMU ADIS16470 presentation!

It is a compact, high-performance IMU breakout board

ROS Workshop Schedule in May 2018

Here is the schedule of our ROS workshop series in May 2018!

May 17 Thu. 13:30- Introductory

Venue: Yurakucho, Tokyo

May 22 Tue. 13:30- Introductory

Venue: Nagoya, Aichi

Inquiries: info[at]opensource-robotics.tokyo.jp

Let’s Bloom! – Releasing ROS packages

Do you build packages?

ROS is an open source project, but in

Where do the packages come from?

How packages are created and distributed is explained in the following ROS wiki page.

Packages are built on this public build farm (Jenkins server).

You can watch many packages being built one after another. For most packages, tests are also run at build time, which gives some assurance that the packages work correctly. A package is rebuilt when it is newly released or its version is upgraded, and also when the packages it depends on are updated. Because ROS packages often depend on other packages, a build may fail even when only another package has changed. If a build fails, the package maintainer is notified by e-mail and resolves the problem. Since the ROS system is very large, such a mechanism is indispensable for keeping it consistent.

Built packages are placed in the Shadow repository for a while, where they are used for testing and similar checks.

About once a month, they are copied from the Shadow repository to the public repository in an operation called Sync, after which ordinary users can install them with apt. Sync announcements are posted regularly to ROS Discourse, so you may have seen them.
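The rebuild rule described above (a package is rebuilt when something it depends on changes) amounts to walking the reverse-dependency graph. Here is a minimal sketch with made-up dependency data; it only illustrates the idea and is not the build farm's implementation.

```python
from collections import deque


def packages_to_rebuild(depends, changed):
    """Return every package that must be rebuilt when `changed` is updated.

    depends: dict package -> list of packages it depends on (hypothetical data)
    """
    # Invert the graph: for each package, which packages depend on it?
    dependents = {}
    for pkg, deps in depends.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(pkg)
    # Breadth-first search over reverse dependencies, transitively.
    to_rebuild = {changed}
    queue = deque([changed])
    while queue:
        pkg = queue.popleft()
        for parent in dependents.get(pkg, ()):
            if parent not in to_rebuild:
                to_rebuild.add(parent)
                queue.append(parent)
    return to_rebuild


# Simplified, hypothetical dependency graph for illustration.
DEPENDS = {
    "std_msgs": [],
    "sensor_msgs": ["std_msgs"],
    "roscpp": [],
    "my_robot_driver": ["roscpp", "sensor_msgs"],
    "my_robot_bringup": ["my_robot_driver"],
    "unrelated_tool": ["roscpp"],
}
```

With this data, updating `sensor_msgs` triggers rebuilds of `my_robot_driver` and, transitively, `my_robot_bringup`, but not of `unrelated_tool`. This transitive fan-out is why a failure can appear in a package whose own code did not change.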

bloom – Tools for release

How can I put my package into this mechanism so that everyone can use it as a binary package? Actually, it is surprisingly easy with a tool called bloom. For how to use bloom, please see the ROS wiki.

This time, I translated into Japanese the pages of bloom's ROS wiki that seem to be the minimum necessary. Please read them carefully and try releasing and maintaining packages. I hope the number of ROS packages and ROS maintainers from Japan will grow, though it is currently very small…

If there is something you do not understand, search for it and ask questions on ROS Answers. Please note that the main ROS Discourse is a forum for discussion, so you should not post questions there. However, the Japan User Group on ROS Discourse is an exception where anything may be posted, so it is okay to ask questions there, and Japanese is fine.

TORK also provides ROS package release and maintenance services. If you are interested, please contact info[at]opensource-robotics.tokyo.jp.

Low-cost LIDARs!(4) Indoor measurement

In the previous post, we looked at ROS compatibility. In this post, we'll compare the data using videos.

Data comparison (indoor)

Below is a video of measurements in the living room of my home. Considering use on a robot, the sensor height was set to 15 cm. I am walking around the sensor.

RPLIDAR A2 (10Hz)

The shape of the room is beautifully reflected, and the walking foot looks like a semicircle.

Sweep (10Hz)

We can roughly make out the shape of the room. The feet are detected, but their shape cannot be recognized.

Sweep (3Hz)

It gives the same resolution as RPLIDAR A2, so the shape of the room is clear. However, the shape of the feet is vague and hard to make out because the update is slow.

Indoor summary

For indoor use, RPLIDAR A2 seems better in terms of angular resolution and distance measurement accuracy. In our use so far, RPLIDAR A2 has worked perfectly for indoor SLAM in ordinary living rooms and offices.

Next time, we will look at outdoor measurement results.

ROS Workshop for Beginners on 17th April 2018

We had ROS Workshop at Yurakucho, Tokyo.

We used a custom LiveUSB with ROS preinstalled, so attendees could try ROS without changing their PC environment.

All attendees worked through all the topics.

Great work, everyone!!

Low-cost LIDARs!(3) ROS package

ROS compatibility

In the previous post, we looked into the specifications of these two LIDARs.

RPLIDAR A2 and Sweep each have an SDK, which lets you use the sensor data from your own software. However, if you are using a LIDAR on a robot, there is no reason not to use ROS. Here we will look at ROS compatibility.

ROS drivers have already been written for both RPLIDAR and Sweep.

The Sweep driver has not been released yet, so it needs to be built from source.

The RPLIDAR driver has already been released, so it can be installed with the apt command:

$ sudo apt install ros-kinetic-rplidar-ros

Starting the sensors

For both, you can easily retrieve data with the included launch file and view it in RViz.

RPLIDAR A2:

$ roslaunch rplidar_ros view_rplidar.launch

Sweep:

$ roslaunch sweep_ros view_sweep_pc2.launch

Differences in messages

rplidar_ros outputs sensor_msgs/LaserScan messages.

sweep_ros, on the other hand, outputs sensor_msgs/PointCloud2. This is probably because, as mentioned before, Sweep's measurements are not synchronized with its rotation. However, by using scan_tools / pointcloud_to_laserscan, PointCloud2 messages can be converted to LaserScan messages.
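Converting Sweep's points into a LaserScan essentially means assigning each point to a fixed angular bin and keeping the nearest return per bin. Below is a minimal sketch of that projection in plain Python rather than as a ROS node. The parameter names mirror sensor_msgs/LaserScan fields, but the function itself is hypothetical, not the pointcloud_to_laserscan code; the default range limits assume Sweep's 0.15 m minimum and 40 m maximum measurement distance.

```python
import math


def points_to_ranges(points, angle_min=-math.pi, angle_max=math.pi,
                     angle_increment=math.radians(1.0),
                     range_min=0.15, range_max=40.0):
    """Project 2D points (x, y) in the sensor frame onto fixed angular bins.

    Each bin keeps the closest valid point; bins with no point stay at +inf,
    the usual "no return" value in a LaserScan ranges array.
    """
    n_bins = int(round((angle_max - angle_min) / angle_increment))
    ranges = [math.inf] * n_bins
    for x, y in points:
        r = math.hypot(x, y)
        if not (range_min <= r <= range_max):
            continue  # outside the sensor's valid range
        angle = math.atan2(y, x)
        if not (angle_min <= angle < angle_max):
            continue
        i = int((angle - angle_min) / angle_increment)
        if i < n_bins and r < ranges[i]:
            ranges[i] = r
    return ranges
```

Because Sweep's sample angles change from one rotation to the next, some bins may stay empty in one scan and be filled in the next; a LIDAR that samples at fixed angles, like RPLIDAR A2, maps more naturally onto this fixed grid.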

Displaying in RViz

I visualized data from both sensors at the same location.

With the default settings, RPLIDAR A2 lets you make out the shape of the room. We also confirmed that it is good enough for map building and autonomous navigation of robots.

Here is Sweep rotating at the same 10 Hz with its default settings.

As expected, at the same speed the coarseness of Sweep's data stands out. It seems to me that the resolution is too low.

I noticed that the default sample rate of the sweep_ros node was 500 Hz, half of the maximum specification (1 kHz). I would prefer maximum performance by default, but we can set the sample_rate parameter to 1000, so I tried again.

The level of detail improved considerably. However, compared to RPLIDAR A2, the shape of the room is still hard to make out.

Next, I lowered the scan (rotation) speed to 3 Hz.

This time I can make out the shape of the room. However, because the rotation speed has decreased, the update cycle is about three times longer than RPLIDAR A2's.

9/14 ROSCon Japan is coming!

The ROS developers conference ROSCon will be held in Japan this year!

It will be held in Akihabara, Tokyo on September 14 (Friday), the week before ROSCon 2018 in Madrid, Spain. Mr. Brian Gerkey, founder of ROS, will also visit Japan and give a keynote address.

Applications for general talks will open in mid-April. Why not present your own ROS project on this occasion?

We are looking forward to it and would like to contribute to its success!

ROS Workshop Schedule in April 2018

Here is the schedule of our ROS workshop series in April 2018!

Apr. 05 Thu. 13:30- Introductory

Apr. 17 Tue. 13:30- Introductory

Apr. 26 Thu. 13:30- Introductory

Venue: Yurakucho, Tokyo

Apr. 10 Tue. 13:30- Introductory

Apr. 23 Mon. 13:30- Introductory

Venue: Nagoya, Aichi

Inquiries: info[at]opensource-robotics.tokyo.jp

Low-cost LIDARs!(2) Specifications

Product Specifications

In the previous post, we introduced low-cost LIDARs.

Let’s compare the specifications of the sensors.

An important precaution!

- Be sure to check the specifications by yourself when you actually use it!

- TORK cannot take responsibility if your project does not work out due to errors in this article!

Principle

Industrial LIDARs often measure distance with the ToF (Time of Flight) method, which calculates the distance from the time it takes for laser light to be reflected by an object and return to the sensor. Sweep uses the ToF method. RPLIDAR A2, on the other hand, uses triangulation instead of ToF: it measures the distance to the reflection point from the displacement of the light-receiving point on its sensor. As you may already know, the light-emitting point, the reflection point, and the light-receiving point form a triangle.

Triangulation generally seems simpler, but as the distance to the reflection point increases, the distance resolution decreases even while the reflected light can still be measured. ToF, on the other hand, can achieve a longer maximum measuring distance because its resolution does not decrease as the distance to the reflection point increases (as long as the reflected light can be measured).

There is another big difference. RPLIDAR A2 samples in synchronization with the angle; in other words, the laser angles at which it measures are the same in every rotation (though in practice there is slight variation). Sweep, on the other hand, synchronizes the start of each scan at the 0° position, but the individual distance measurements are not synchronized with the angle. According to the Sweep specification, the data obtained from the sensor is a set of (angle, distance) pairs. This seems to be because the laser that Sweep emits is a pattern rather than a single pulse, so the sample rate is not constant (500 to 600 Hz by default).

High-end 2D LIDARs such as URG and SICK acquire distances synchronized with the angle, so Sweep's method seems a bit more difficult to use.
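The two principles can be compared with a little arithmetic. ToF converts a round-trip time t into a distance d = c·t/2, so its distance step depends only on the timing resolution. Triangulation with focal length f and baseline b gives z = f·b/x for a spot offset x on the sensor, so one sensor step Δx corresponds to Δz ≈ z²·Δx/(f·b), which grows with the square of the distance. This is a simplified sketch: the optical parameters below are made-up values for illustration, not RPLIDAR A2 or Sweep specifications.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_distance(round_trip_time_s):
    """ToF: the light travels to the object and back, so halve the path length."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def tof_round_trip_time(distance_m):
    """Inverse of tof_distance: time for light to cover 2 * distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


def triangulation_distance(focal_length_m, baseline_m, spot_offset_m):
    """Triangulation by similar triangles: z = f * b / x."""
    return focal_length_m * baseline_m / spot_offset_m


def triangulation_step(distance_m, focal_length_m, baseline_m, sensor_step_m):
    """Distance change for one sensor step, from |dz/dx| = z**2 / (f * b)."""
    return distance_m ** 2 * sensor_step_m / (focal_length_m * baseline_m)


if __name__ == "__main__":
    # 40 m corresponds to a round trip of roughly 267 ns,
    # and a 1 cm distance step needs roughly 67 ps of timing resolution.
    print(f"{tof_round_trip_time(40.0) * 1e9:.1f} ns")
    print(f"{tof_round_trip_time(0.01) * 1e12:.1f} ps")
    # Hypothetical optics: f = 4 mm, b = 20 mm, 0.1 um effective sensor step.
    for z in (0.5, 1.0, 2.0, 4.0):
        step_mm = triangulation_step(z, 0.004, 0.02, 1e-7) * 1000
        print(f"triangulation at {z} m: ~{step_mm:.2f} mm per sensor step")
```

Whatever the actual optics, doubling the distance quadruples the triangulation step, while the ToF step stays fixed by the timing electronics.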

Angle resolution, measurement cycle, sample rate

Angular resolution and measurement cycle are important LIDAR performance figures. Angular resolution is the angular spacing between measurement points. When the resolution is low, there are too few measurement points on objects and the environment, and their shapes cannot be made out well. The measurement cycle is the time taken for one scan (one rotation); when it is long, accurate measurement becomes difficult when measuring moving objects or when the robot itself is moving.

Both sensors measure their surroundings by rotating a unit that integrates the light source and the receiver. RPLIDAR A2's rotation speed is variable from 5 Hz to 15 Hz, but the number of measurement points is the same (400 points) regardless of rotation speed.

Sweep's rotation speed is variable from 1 Hz to 10 Hz, but since its sample rate is at most 1000 samples/sec (precisely 1000 to 1025), the faster it rotates, the fewer measurement points there are per revolution.

- RPLIDAR A2: 400 samples / rotation

- Sweep: 1000 samples / sec

If both are rotating at 10 Hz,

- RPLIDAR A2: 400 samples / rotation

- Sweep: 100 samples / rotation

Therefore, RPLIDAR A2 has four times the angular resolution. To obtain the same resolution with Sweep, you need to reduce the rotation speed to 1/4 (2.5 Hz).
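The same arithmetic works for any rotation speed: samples per rotation = sample rate / rotation speed, and angular resolution = 360° / samples per rotation. A small sketch using the figures quoted in this post (the function names are our own):

```python
RPLIDAR_A2_SAMPLES_PER_ROTATION = 400  # fixed, independent of rotation speed
SWEEP_MAX_SAMPLE_RATE_HZ = 1000.0      # samples per second (spec maximum)


def samples_per_rotation(sample_rate_hz, rotation_hz):
    """Points per revolution for a sensor with a fixed sample rate."""
    return sample_rate_hz / rotation_hz


def angular_resolution_deg(samples):
    """Angle between neighbouring measurement points in one revolution."""
    return 360.0 / samples


def rotation_hz_for(sample_rate_hz, samples):
    """Rotation speed at which a fixed-rate sensor yields `samples` per revolution."""
    return sample_rate_hz / samples


if __name__ == "__main__":
    print("RPLIDAR A2:", angular_resolution_deg(RPLIDAR_A2_SAMPLES_PER_ROTATION), "deg")
    for hz in (10.0, 3.0, 2.5):
        n = samples_per_rotation(SWEEP_MAX_SAMPLE_RATE_HZ, hz)
        print(f"Sweep @ {hz} Hz: {n:.0f} samples, "
              f"{angular_resolution_deg(n):.2f} deg, scan period {1.0 / hz:.2f} s")
    print("Sweep matches 400 samples at",
          rotation_hz_for(SWEEP_MAX_SAMPLE_RATE_HZ, 400), "Hz")
```

The trade-off is visible in the scan period column: every gain in Sweep's angular resolution comes from rotating more slowly, which lengthens the measurement cycle.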

Maximum measurement distance and distance resolution

Maximum measurement distance is also an important performance figure. Longer may seem better; however, in our experience 10 m is enough for office navigation, while even 100 m is not enough for applications like automated driving. It depends on the application.

Confusingly, RPLIDAR A2's measurement distance differs by model, so pay attention to the model number when purchasing. This time I used the RPLIDAR A2M8, with a measuring distance of up to 8 m.

- RPLIDAR A2M4: maximum measurement distance 6 m

- RPLIDAR A2M8: maximum measurement distance 8 m

- RPLIDAR A2M6: maximum measurement distance 16 m

On the other hand, Sweep has a maximum measurement distance of 40 m.

By the way, the minimum measurement distance (the near-side limit) is about the same for both.

- RPLIDAR A2M8: minimum measurement distance 0.15 m

- Sweep: minimum measurement distance 0.15 m

What about distance resolution? For the RPLIDAR A2M8, it is:

- 0.5 mm or less at distances of 1.5 m or less

- 1% of the distance or less at distances over 1.5 m

The closer the object, the higher the resolution, so the shapes of nearby objects can be captured properly. Conversely, we can expect both distance accuracy and resolution to fall as the distance increases (the specifications give no error data).

On the other hand, for Sweep,

- Distance resolution: 1 cm or less

regardless of the measurement distance. The Sweep specification also includes a graph of distance measurement error, which shows that the error does not increase as the measurement distance grows. This is an advantage of the ToF method.
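The two resolution specifications above can be written as simple functions to see where they cross. This sketch encodes only the upper bounds quoted in this post; real error behaviour will differ, and the function names are our own.

```python
def rplidar_a2m8_resolution_m(distance_m):
    """Upper bound of RPLIDAR A2M8 distance resolution, per the quoted spec."""
    if distance_m <= 1.5:
        return 0.0005             # 0.5 mm or less up to 1.5 m
    return 0.01 * distance_m      # 1% of the distance beyond 1.5 m


def sweep_resolution_m(distance_m):
    """Sweep distance resolution: 1 cm or less, regardless of distance."""
    return 0.01


if __name__ == "__main__":
    for d in (0.5, 1.5, 3.0, 8.0):
        print(f"{d:>4} m  RPLIDAR A2M8 <= {rplidar_a2m8_resolution_m(d) * 1000:.1f} mm"
              f"   Sweep <= {sweep_resolution_m(d) * 1000:.1f} mm")
```

Under these numbers RPLIDAR A2M8 is finer up to 1.5 m, and beyond that Sweep's constant 1 cm becomes the better figure, which matches the short-range versus long-range character described in this post.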

Others

Both are fairly small, but since their top parts rotate, they must be installed so that nothing touches the rotating parts.

- RPLIDAR A2: diameter 70 mm

- Sweep: diameter 65 mm

For Sweep, the cable connector can face either of two directions, sideways or downward, which makes wiring easy. For RPLIDAR, it seems difficult to keep the cable run short because the cable comes straight out.

When connected via USB, both run on USB bus power. You can also communicate with them over UART, for example from a microcontroller, but in that case a separate power supply is needed.

Summary

Although the two sensors look similar at first glance, their design philosophies are actually somewhat different. RPLIDAR A2 is suited to short distances and indoor use, while Sweep is specified for long distances and outdoor use. I am afraid that Sweep's angular resolution may be too low for some uses.

adi_driver: ROS package for ADI sensors is released!

We are happy to announce the release of a new ROS package, adi_driver!

This is a driver package for ADI (Analog Devices Inc.) sensors. Currently, it supports ADIS16470 and ADXL345 (experimental).

It is a compact, high-performance IMU breakout board

The ADIS16470 is a brand-new IMU incorporating a 3-axis gyro sensor and a 3-axis acceleration sensor. It has a remarkably wide measuring range: ±2000 deg/s for angular velocity and ±40 g for acceleration. This is sufficient performance for various robots such as wheeled mobile robots, drones, and manipulators.

The sensor alone costs $199 and the breakout board costs $299. For details, please see the product page.

If you prepare the sensor breakout board and a USB-SPI converter (USB-ISS), you can easily obtain 3D attitude information with this package. For detailed instructions, please refer to the document on GitHub. If you encounter problems, please report them in Issues.
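As a minimal illustration of how attitude can be derived from IMU data, the sketch below computes roll and pitch from a static accelerometer reading, using one common axis convention. This is a generic technique, not what adi_driver itself does; in practice gyro data is fused in (for example with a complementary or Madgwick filter), and yaw cannot be obtained from gravity alone.

```python
import math


def tilt_from_accel(ax, ay, az):
    """Roll and pitch [rad] from a static accelerometer reading (any consistent unit).

    Assumes the sensor is at rest, so it measures only gravity. Signs follow one
    common convention and may need flipping for a different axis definition.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch


if __name__ == "__main__":
    g = 9.80665  # standard gravity, m/s^2
    for reading in [(0.0, 0.0, g), (0.0, g / math.sqrt(2), g / math.sqrt(2))]:
        roll, pitch = tilt_from_accel(*reading)
        print(f"roll {math.degrees(roll):.1f} deg, pitch {math.degrees(pitch):.1f} deg")
```

With ±40 g of range the accelerometer is not the limiting factor here; the weakness of this approach is that any motion acceleration is mistaken for tilt, which is why gyro fusion is normally used.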

Enjoy!

Low-cost LIDARs!(1) RPLIDAR A2 vs Sweep

Key device for robots – LIDAR

Speaking of key devices for autonomous mobile robots, LIDAR (Light Detection and Ranging) is the one. It is a sensor that measures the distance to objects by emitting a laser beam (usually infrared) into the surroundings and detecting the light reflected back from them.
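For a pulsed time-of-flight LIDAR, the distance follows from the round-trip time Δt of the light: d = c·Δt / 2, halved because the light travels out and back. Not every LIDAR measures this way (some low-cost units use triangulation instead), so treat this as a sketch of the time-of-flight principle only; `tof_distance` is a hypothetical helper.

```shell
#!/bin/sh
# Distance from round-trip time for a pulsed time-of-flight range finder.
C=299792458   # speed of light in vacuum, m/s

# tof_distance SECONDS -> metres to the target
tof_distance() {
  awk -v t="$1" -v c="$C" 'BEGIN { printf "%.3f\n", c * t / 2 }'
}

tof_distance 20e-9    # a 20 ns round trip corresponds to about 3 m
```

The numbers also show why timing precision matters: one nanosecond of error corresponds to roughly 15 cm of range.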

A commonly used type is the two-dimensional LIDAR, which measures distances on a plane around the sensor by rotating a single laser beam. Examples are the products of SICK and Hokuyo Automatic.

SICK’s LIDAR products

URG series (Hokuyo Denki)

LIDAR has long been used in mobile robot research to measure the environment for map building and autonomous navigation. Then, at the 2007 DARPA Urban Challenge, Velodyne's 3D LIDAR brought about a breakthrough in automated driving.

Disruptive innovation of LIDAR?

These LIDAR products were originally made for industrial use, such as factory production equipment and safety equipment. They are therefore rugged and waterproof, but quite expensive. Outside of research and development, they are not something an individual can easily buy.

Recently, however, low-cost LIDARs have become generally available. Although their performance and reliability are insufficient for industrial use, they offer simple functions that are sufficient for hobby and research use. In my personal opinion, they may be a “disruptive innovation”-like product and could end up in applications we do not expect today.

This time, I will introduce two of them, RPLIDAR A2 and Sweep, and compare them.

Say hello to Hiro at Shinshu Univ. !

Yamazaki Laboratory students

One day in February, I visited the Yamazaki Laboratory at Shinshu University. We introduced TORK's recent activities and the latest information on ROS, and exchanged opinions. The students listened attentively, and many questions came up.

Hiro with a trolley

There are two Hiros in the Yamazaki Laboratory. Together we checked the recently added MoveIt! functions and answered questions about usage as much as possible.

The Yamazaki Laboratory researches how robots can handle flexible materials made of cloth, such as towels and shirts. We look forward to more dexterous robots.

Dr. Yamazaki and all the students in the laboratory, thank you!

New aibo has arrived!

Sony’s pet robot “aibo” has arrived in TORK!

We named it “TORK-chan (♀)”.

Sony announced that “ROS runs on aibo”, and it seems to be true. Cool!

aibo really was running ros-kinetic. https://t.co/V1FlT3He9l

Shocking.

— OTL (@OTL) January 11, 2018

The list and licenses of the installed open source software can be found on the following pages.

In addition, the open source code can now be downloaded from here.

From our brief examination, we could not find a way for users to access the aibo system. Some SDKs may be published in the near future. Until then, let's enjoy this cute pet robot!

We’ve visited THK Co., Ltd.!

We visited THK CO., LTD. and talked about ROS support for robots.

This robot also works with ROS!

It is built to order.

Please refer to the following link if you are interested.

Contact: SEED Solutions

We also offer consultations on introducing ROS, developing and releasing ROS packages, and OSS in general.

Please do not hesitate to contact us!

info [at] opensource-robotics.tokyo.jp

ROS Workshop Schedule in November 2017

Here is the schedule of our ROS workshop series in autumn 2017!

Nov. 07 Tue. 13:30- Introductory

Nov. 15 Wed. 13:30- Introductory

Nov. 30 Thu. 13:30- Introductory

Venue: Yurakucho, Tokyo

Nov. 14 Tue. 13:30- Introductory

Nov. 20 Mon. 13:30- Introductory

Nov. 28 Tue. 13:30- Introductory

Venue: Nagoya, Aichi

Inquiries: info[at]opensource-robotics.tokyo.jp

Interactive ROS Indigo environment with Docker (1)

Indigo? Kinetic?

ROS keeps getting new releases. The latest version is Lunar Loggerhead (Lunar), preceded by Kinetic Kame (Kinetic) and Jade Turtle (Jade). Traditionally, they are named after turtles.

However, I think many people are still actually using the earlier version, Indigo Igloo (Indigo). Many Indigo packages have not yet been released for Kinetic. For the same reason, TORK also currently uses Indigo in our workshop materials and contract development.

Indigo is based on Ubuntu 14.04, while Kinetic and Lunar are based on Ubuntu 16.04 or later. Installing Ubuntu 14.04 on our PC just to use Indigo feels a bit outdated.

A virtual machine (VM), such as VMware or VirtualBox, could be one solution. However, it can cause problems when you try to use hardware. For example, there are often device driver issues such as poor graphics performance, incompatible USB cameras, or lack of USB 3.0 support.

Docker can be a remedy for this. With Ubuntu 16.04 installed on your PC, you can easily work in an interactive Indigo development environment that uses devices and the GUI.

Installation of Docker

First, install Docker. Install the latest version following the instructions on the Docker pages, using the Community Edition (CE).

Preparing the Indigo Docker image

OSRF provides an Indigo Docker image on DockerHub.

Get the Indigo image with the following command.

$ docker pull osrf/trusty-ros-indigo:desktop

By the way, TORK also publishes an Indigo Docker image. It contains additional packages for the TORK workshops, Nextage Open and other useful tools. You can find it here as well.

For example, to check the version with the ‘lsb_release’ command:

$ docker run osrf/trusty-ros-indigo:desktop lsb_release -a
No LSB modules are available.
Distributor ID: Ubuntu
Description:    Ubuntu 14.04 LTS
Release:        14.04
Codename:       trusty

By default, commands are executed as root. To change the user and the access rights to devices, you need to pass the appropriate options to docker run. For more information, please refer to the Docker Run Reference.

Interactive start-up sharing the user and home directory

Here we want to run a shell with our own user rights and work interactively in the home directory. We created a script that applies the settings required for that.

$ ./docker_shell osrf/trusty-ros-indigo:desktop

After that, you are in a shell as if the OS had become Trusty + ROS Indigo.

$ . /opt/ros/indigo/setup.bash

You can use the shell like a normal Indigo environment. Be careful, however, that the home directory is shared: if your base system uses Kinetic, you can easily get confused about whether a workspace is for Kinetic or Indigo.
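TORK's docker_shell script itself is not reproduced in this post. As a rough idea of the kind of settings involved, the hypothetical function below (not TORK's script) assembles a docker run command that runs as your own UID/GID, mounts your home directory at the same path, and forwards the X11 socket for GUI tools. All options are standard docker run flags; the function name and the DRY_RUN switch are made up for this sketch, and X11 access may need extra host-side configuration.

```shell
#!/bin/sh
# Hypothetical docker_shell-like helper (NOT TORK's actual script).
# Usage: docker_shell IMAGE      (set DRY_RUN=1 to print the command instead)
docker_shell() {
  image=${1:?usage: docker_shell IMAGE}
  set -- docker run -it --rm \
    --user "$(id -u):$(id -g)" \
    --env HOME="$HOME" \
    --volume "$HOME:$HOME" \
    --workdir "$HOME" \
    --volume /etc/passwd:/etc/passwd:ro \
    --volume /etc/group:/etc/group:ro \
    --env DISPLAY="${DISPLAY:-}" \
    --volume /tmp/.X11-unix:/tmp/.X11-unix \
    "$image" bash
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"   # show the command that would run
  else
    "$@"                 # start an interactive shell in the container
  fi
}
```

Usage would be `docker_shell osrf/trusty-ros-indigo:desktop`. Mounting /etc/passwd and /etc/group read-only lets the container resolve your user name, and mapping $HOME to the same path is exactly why the shared-workspace caution above applies.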

If you are using the NVidia driver

If the PC does not use a special X graphics driver, you can also use GUI tools such as rviz. However, if the PC uses the NVidia driver, apps that use OpenGL, including rviz, do not work. We will introduce a solution next time.

Successful World MoveIt! Day Tokyo!

World MoveIt! Day Tokyo ended successfully, with about 30 participants on the day. We would like to thank everyone who participated, sponsored and supported the event.

Nihon Binary kindly provided a Baxter robot, which stood on the floor of the fashionable LEAGUE Yurakucho.

Nihon Binary also exhibited a Jaco2 arm and a HEBI arm, and some experts tackled making them move with MoveIt! and Gazebo.

Enjoying the hackathon on World MoveIt! Day. Thanks @tokyo_os_robot for sharing your day @moveitrobot @ROSIndustrial @rosorg @OSRFoundation pic.twitter.com/A9e4LGv7xG

— ROS-Industrial_AP (@ROSIndustrialAP) October 19, 2017

ARTC in Singapore, another WMD host, stayed connected by video chat.

Everyone tried MoveIt! in simulation and on real robots while having snacks. The staff helped with installation and operation troubles as much as possible.

Some people actually moved Baxter and tried programming with moveit_commander. Each participant got meaningful results according to their level and interests. At the end, some people presented what they had achieved that day.

We found that many people in Japan are interested in MoveIt!. Let's keep using MoveIt! and ROS together and improve them with feedback and commits.

World MoveIt! Day 2017 Tokyo is coming!

MoveIt! is one of the major ROS packages for robot manipulators. A local event of “World MoveIt! Day 2017” for all developers and users will be held in Tokyo this year.

- Title: World MoveIt! Day Tokyo

- Date and time: October 19 (Thursday) 11:00 to 18:00 (the date differs from the events in other regions)

- Location: [ LEAGUE Yurakucho ] event space

- Admission: Free

For the detailed program and registration, please visit the following site. The schedule may change without notice.

It is going to be a very casual event. Anyone can join: people who already use MoveIt!, people who do not use it yet, and people who have never heard of it.

If you can bring your own notebook PC with ROS installed, you can try MoveIt! with the simulator or an actual robot, and learn how to use it as the staff and participants teach each other.

We will also exchange information on new features and bug fixes for MoveIt! itself. Let's boost MoveIt! together!

New tutorial for Nextage Open published in Japanese!

A comprehensive tutorial on the ROS package for Nextage Open is available, but it is written in English for users around the world. At the request of many Japanese users, we have now published a Japanese version of the tutorial.

We hope it will serve you in research and development. We also think it can be helpful for those who are considering introducing the Nextage Open system.

Of course, the English version of the tutorial ( http://wiki.ros.org/rtmros_nextage/Tutorials ) is still available as before, and a link to the Japanese version has been added to it.

ROS Workshop Schedule in October 2017

Here is the schedule of our ROS workshop series in autumn 2017!

Oct. 04 Wed. 13:30- Introductory

Oct. 18 Wed. 13:30- Introductory

Oct. 24 Tue. 13:30- Introductory

Venue: Yurakucho, Tokyo

Oct. 10 Tue. 13:30- Introductory

Oct. 23 Mon. 13:30- Introductory

Venue: Nagoya, Aichi

Inquiries: info[at]opensource-robotics.tokyo.jp

ROS Workshop for Beginners on 27th September!

We had ROS Workshop at Yurakucho, Tokyo.

We used a custom LiveUSB with ROS preinstalled, so participants could experience ROS without changing their PC environment.

All attendees worked through all the topics.

Great work, everyone!!

The workshop schedule of October is now open!

In October, the ROS Workshop Intermediate Course (Navigation) is also available!

We also have a blog for beginners studying ROS for the first time. Please refer to it.

We also accept private workshops and other consultations on OSS.

Please do not hesitate to contact us!

info [at] opensource-robotics.tokyo.jp

We participated in ROSCon2017, Vancouver!

We took part in ROSCon2017, held in Vancouver on September 21 and 22!

ROSCon is an annual meeting where people involved with ROS in a variety of roles, such as users, developers, universities and companies, gather to exchange information.

Dr. Brian Gerkey opened the session

TORK had not signed up for a presentation in advance, but we were able to report our activities in a three-minute lightning talk.

There were many presentations from a wide variety of countries and organizations on new robots, new devices, and robotics research and development. This time we also heard many topics on ROS2.

It is a really fun conference for ROS developers and users, so why not consider attending in the future? The venue and schedule for next year have not been decided yet, but should be announced soon.

ROS + Snappy Ubuntu Core (4) : Ubuntu Core install on Raspberry Pi 3

In the last post, we investigated the inside of a Snappy package.

Following the contents of this page, let's install Ubuntu Core on a Raspberry Pi 3.

The following work was done on Ubuntu 16.04. First, download the ‘Ubuntu Core 16 image for Raspberry Pi 3’ image file from the Ubuntu Core image page. It is 320 MB.

Next, copy the image to the MicroSD card. In my environment, the MicroSD card was recognized as /dev/sda.

$ xzcat ubuntu-core-16-pi3.img.xz | sudo dd of=/dev/sda bs=32M
$ sync
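Because dd overwrites the target without asking, and /dev/sda is the system disk on many machines, a small guard before writing can help. The sketch below is a heuristic, not a guarantee: it only checks the kernel's removable flag in sysfs (/sys/block/<dev>/removable), which some card readers may report differently, so confirm the device name with lsblk as well. The function names are hypothetical.

```shell
#!/bin/sh
# is_removable DEV [SYSFS_ROOT] -> success if the kernel flags DEV as removable.
# SYSFS_ROOT defaults to /sys; it is a parameter only so the check can be tested.
is_removable() {
  _name=${1#/dev/}
  _root=${2:-/sys}
  [ "$(cat "$_root/block/$_name/removable" 2>/dev/null)" = 1 ]
}

# flash_image IMAGE.xz DEV -> write the image only if DEV looks removable.
# Pass the whole device (e.g. /dev/sdb), not a partition such as /dev/sdb1.
flash_image() {
  img=$1
  target=$2
  if ! is_removable "$target"; then
    echo "refusing: $target is not flagged as removable" >&2
    return 1
  fi
  xzcat "$img" | sudo dd of="$target" bs=32M && sync
}

# Example: flash_image ubuntu-core-16-pi3.img.xz /dev/sda
```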

Boot the Raspberry Pi 3 with the MicroSD card.

On first start-up you need to log in from the console, so connect a keyboard and a display.

Oops. It asks me to enter the email address of a login.ubuntu.com account: you need an ubuntu.com account to use Ubuntu Core. An Internet connection is also a prerequisite for installation, which seems to be a problem in proxy environments.

Please access login.ubuntu.com from another PC and create an account.

Furthermore, it says there is no ssh key. You need to register an ssh public key on the SSH Keys page of login.ubuntu.com. By authenticating with the private key corresponding to this public key, you can log in to the Raspberry Pi 3 with ssh.

If your machine is Ubuntu, type:

$ ssh-keygen

This creates ~/.ssh/id_rsa.pub; copy its contents and paste them into the SSH Keys form.

From the machine where you set up the ssh key, you can now log in to the Raspberry Pi with ssh. The username is the one registered at ubuntu.com. The Raspberry Pi 3 obtains its IP address by DHCP, so you can check it on the console.

$ ssh username@192.168.0.10

In the initial state, the familiar Ubuntu apt and dpkg commands are not available; instead, you extend the system by adding snap packages. Let's first install the snapweb package to view the package list in a web browser.

$ sudo snap login username@your_address.com
$ sudo snap install snapweb
$ snap list
Name        Version       Rev   Developer  Notes
core        16-2          1443  canonical  -
pi2-kernel  4.4.0-1030-3  22    canonical  -
pi3         16.04-0.5     6     canonical  -
snapweb     0.26-10       305   canonical  -

In a web browser, access “http://192.168.0.10:4200/”.

It shows the list of packages that are currently installed. There also seems to be a store,

but as far as I can tell, it has only a few apps so far.

ROS Workshop for Beginners on 30th August

We had ROS Workshop at Yurakucho, Tokyo.

We used a custom LiveUSB with ROS preinstalled, so participants could experience ROS without changing their PC environment.

All attendees worked through all the topics.

Great work, everyone!!

ROS Workshop in Nagoya has started

Here is the schedule of our ROS workshops in Nagoya!

September 5 Tue. 13:30- Introductory

September 15 Fri. 13:30- Introductory

Venue: Fushimi, Nagoya

Inquiries: info[at]opensource-robotics.tokyo.jp

ROS Workshop Schedule from September to October 2017

Here is the schedule of our ROS workshop series in autumn 2017!

Sept. 07 Thu. 13:30- Introductory

Sept. 27 Wed. 13:30- Introductory

Oct. 04 Wed. 13:30- Introductory

Oct. 18 Wed. 13:30- Introductory

Oct. 24 Tue. 13:30- Introductory

Venue: Yurakucho, Tokyo

Sept. 05 Tue. 13:30- Introductory

Sept. 15 Fri. 13:30- Introductory

Venue: Nagoya, Aichi

Inquiries: info[at]opensource-robotics.tokyo.jp

ROS + Snappy Ubuntu Core (3) : Looking into the Snappy package

How does the Snappy package we created in the last post work?

$ which talker-listener.listener
/snap/bin/talker-listener.listener

$ ls -l /snap/bin/talker-listener.listener
lrwxrwxrwx 1 root root 13 Jul 21 16:04 /snap/bin/talker-listener.listener -> /usr/bin/snap

This seems to be equivalent to doing the following.

$ snap run talker-listener.listener

The snap file is simply expanded under /snap/. The directory ‘current’ should point to the latest version, and it is a symbolic link to ‘x1’. This makes rolling back easy.

$ ls -l /snap/talker-listener/
total 0
lrwxrwxrwx  1 root root   2 Jul 21 16:04 current -> x1
drwxrwxr-x 10 root root 222 Jul 21 16:00 x1
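The rollback idea can be illustrated with plain symlinks in a scratch directory: installing a new revision and rolling back are both just repointing `current`. This only mimics the layout shown above; on a real system snapd manages /snap itself, and you would use `snap revert talker-listener` rather than touching the links by hand.

```shell
#!/bin/sh
# Mimic the /snap/<name>/current -> x1 layout in a temporary directory.
demo=$(mktemp -d)
mkdir "$demo/x1" "$demo/x2"          # two installed revisions

ln -sfn x1 "$demo/current"           # initial install
ln -sfn x2 "$demo/current"           # "upgrade": repoint the link
echo "after upgrade:  $(readlink "$demo/current")"
ln -sfn x1 "$demo/current"           # "rollback": point back at x1
echo "after rollback: $(readlink "$demo/current")"
```

Since the old revision's files stay in place, switching back costs no copying at all.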

$ ls /snap/talker-listener/current
bin                       command-roscore.wrapper  etc  meta  snap  var
command-listener.wrapper  command-talker.wrapper   lib  opt   usr

I see command-listener.wrapper. Looking inside:

#!/bin/sh
export PATH="$SNAP/usr/sbin:$SNAP/usr/bin:$SNAP/sbin:$SNAP/bin:$PATH"
export LD_LIBRARY_PATH="$LD_LIBRARY_PATH:$SNAP/lib:$SNAP/usr/lib:$SNAP/lib/x86_64-linux-gnu:$SNAP/usr/lib/x86_64-linux-gnu"
export ROS_MASTER_URI=http://localhost:11311
export ROS_HOME=${SNAP_USER_DATA:-/tmp}/ros
export LC_ALL=C.UTF-8
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$SNAP/lib:$SNAP/usr/lib:$SNAP/lib/x86_64-linux-gnu:$SNAP/usr/lib/x86_64-linux-gnu
export PYTHONPATH=$SNAP/usr/lib/python2.7/dist-packages:$PYTHONPATH
export PATH=$PATH:$SNAP/usr/bin

# Shell quote arbitrary string by replacing every occurrence of '
# with '\'', then put ' at the beginning and end of the string.
# Prepare yourself, fun regex ahead.
quote()
{
    for i; do
        printf %s\\n "$i" | sed "s/'/'\\\\''/g;1s/^/'/;\$s/\$/' \\\\/"
    done
    echo " "
}

BACKUP_ARGS=$(quote "$@")
set --
if [ -f $SNAP/opt/ros/kinetic/setup.sh ]; then
    _CATKIN_SETUP_DIR=$SNAP/opt/ros/kinetic . $SNAP/opt/ros/kinetic/setup.sh
fi
eval "set -- $BACKUP_ARGS"

export LD_LIBRARY_PATH="$SNAP/opt/ros/kinetic/lib:$SNAP/usr/lib:$SNAP/usr/lib/x86_64-linux-gnu:$LD_LIBRARY_PATH"
export LD_LIBRARY_PATH=$SNAP_LIBRARY_PATH:$LD_LIBRARY_PATH
exec "rosrun" roscpp_tutorials listener "$@"

It seems that this wrapper sets up the ROS environment and then executes the command. In other words, you no longer need the “source setup.bash” you always do with ROS, which makes things easier.
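The quote / BACKUP_ARGS / eval sequence in the wrapper saves the command-line arguments, clears them with `set --` before sourcing setup.sh (presumably so the sourced script neither sees nor clobbers them), and restores them afterwards. The stand-alone sketch below reuses the same quote() logic to show that arguments containing spaces, single quotes or dollar signs survive the round trip; `demo` is a hypothetical name.

```shell
#!/bin/sh
# Same quoting helper as in the wrapper: single-quote each argument,
# rewriting embedded ' as '\'', one argument per line with a trailing backslash.
quote() {
  for i; do
    printf '%s\n' "$i" | sed "s/'/'\\\\''/g;1s/^/'/;\$s/\$/' \\\\/"
  done
  echo " "
}

# demo ARGS... -> prints the argument count, then each argument in brackets,
# after saving, clearing and restoring "$@" the way the wrapper does.
demo() {
  BACKUP_ARGS=$(quote "$@")
  set --                       # stand-in for sourcing setup.sh with no arguments
  eval "set -- $BACKUP_ARGS"   # restore the original arguments verbatim
  echo "$#"
  for a; do printf '[%s]\n' "$a"; done
}

demo "a b" "it's" '$HOME'
```

The trailing backslashes turn the saved lines into one continued `set --` command when eval runs it, and single quoting prevents `$HOME` from being expanded on restore.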

What else is included in the snap package?

$ tree -L 3 -d /snap/talker-listener/current/
/snap/talker-listener/current/
├── bin
├── etc
│   ├── ca-certificates
│   │   └── update.d
│   ├── dpkg
│   │   └── dpkg.cfg.d
│   ├── emacs
│   │   └── site-start.d
│   ├── gss
│   │   └── mech.d
│   ├── ldap
│   ├── openmpi
│   ├── perl
│   │   ├── CPAN
│   │   └── Net
│   ├── python2.7
│   ├── python3.5
│   ├── sgml
│   ├── ssl
│   │   ├── certs
│   │   └── private
│   ├── systemd
│   │   └── system
│   └── xml
├── lib
│   └── x86_64-linux-gnu
├── meta
│   └── gui
├── opt
│   └── ros
│       └── kinetic
├── snap
├── usr
│   ├── bin
│   ├── include
│   │   ├── apr-1.0
│   │   ├── arpa
│   │   ├── asm-generic
│   │   ├── boost
│   │   ├── c++
│   │   ├── console_bridge
│   │   ├── drm
│   │   ├── gtest
│   │   ├── hwloc
│   │   ├── infiniband
│   │   ├── libltdl
│   │   ├── linux
│   │   ├── log4cxx
│   │   ├── misc
│   │   ├── mtd
│   │   ├── net
│   │   ├── netash
│   │   ├── netatalk
│   │   ├── netax25
│   │   ├── neteconet
│   │   ├── netinet
│   │   ├── netipx
│   │   ├── netiucv
│   │   ├── netpacket
│   │   ├── netrom
│   │   ├── netrose
│   │   ├── nfs
│   │   ├── numpy -> ../lib/python2.7/dist-packages/numpy/core/include/numpy
│   │   ├── openmpi -> ../lib/openmpi/include
│   │   ├── protocols
│   │   ├── python2.7
│   │   ├── rdma
│   │   ├── rpc
│   │   ├── rpcsvc
│   │   ├── scsi
│   │   ├── sound
│   │   ├── uapi
│   │   ├── uuid
│   │   ├── video
│   │   ├── x86_64-linux-gnu
│   │   └── xen
│   ├── lib
│   │   ├── compat-ld
│   │   ├── dpkg
│   │   ├── emacsen-common
│   │   ├── gcc
│   │   ├── gold-ld
│   │   ├── lapack
│   │   ├── ldscripts
│   │   ├── libblas
│   │   ├── mime
│   │   ├── openmpi
│   │   ├── pkgconfig
│   │   ├── python2.7
│   │   ├── python3
│   │   ├── python3.5
│   │   ├── sasl2
│   │   ├── sbcl
│   │   ├── ssl
│   │   ├── valgrind
│   │   └── x86_64-linux-gnu
│   ├── sbin
│   ├── share
│   │   ├── aclocal
│   │   ├── applications
│   │   ├── apps
│   │   ├── apr-1.0
│   │   ├── bash-completion
│   │   ├── binfmts
│   │   ├── boostbook
│   │   ├── boost-build
│   │   ├── bug
│   │   ├── ca-certificates
│   │   ├── cmake-3.5
│   │   ├── debhelper
│   │   ├── dh-python
│   │   ├── distro-info
│   │   ├── doc
│   │   ├── doc-base
│   │   ├── docutils
│   │   ├── dpkg
│   │   ├── emacs
│   │   ├── glib-2.0
│   │   ├── icu
│   │   ├── libtool
│   │   ├── lintian
│   │   ├── man
│   │   ├── mime
│   │   ├── mpi-default-dev
│   │   ├── numpy
│   │   ├── openmpi
│   │   ├── perl
│   │   ├── perl5
│   │   ├── pixmaps
│   │   ├── pkgconfig
│   │   ├── pyshared
│   │   ├── python
│   │   ├── python3
│   │   ├── sgml
│   │   ├── sgml-base
│   │   ├── xml
│   │   └── xml-core
│   └── src
│       └── gtest
└── var
    └── lib
        ├── sgml-base
        ├── systemd
        └── xml-core

144 directories

Wow!

The snap package for talker-listener contains the entire directory structure of the Linux system it needs, as well as ROS. It feels a bit like overkill… but this is the so-called container virtualization that Docker and others have made popular these days.

By the way, the size of the generated snap file is:

$ ls -sh talker-listener_0.1_amd64.snap
154M talker-listener_0.1_amd64.snap

It is 154 MB! Will this be acceptable as the number of packages grows?

There are probably ways to reduce this, since the package contains many unnecessary files.

In the next post, we’ll show how to install Ubuntu Core into the Raspberry Pi 3 machine.

Roomblock(5) : 3D Printable Frame Structure

As described in the previous post, Roomblock carries a Raspberry Pi, a mobile battery and an RPLIDAR on a Roomba. We need a frame structure to hold them together.

The frame is an extendable, shelf-like structure. It consists of the first stage for the battery, the second stage for the Raspberry Pi, and the top stage for the RPLIDAR.

We named this robot Roomblock, because it is extendable like “blocks”.

We publish the STL files for 3D printing so that you can print the frame with your own 3D printer. Please check the Thingiverse site.

We share how to build Roomblock at Instructables. Please try it at your own risk.

ROS Workshop for Beginners on 8th August

TORK marked its 4th anniversary on August 8th, 2017.

Thank you all for your business with us and for your understanding of open source robotics.

We had ROS Workshop at Yurakucho, Tokyo.

We used a custom LiveUSB with ROS preinstalled, so participants could experience ROS without changing their PC environment.

All attendees worked through all the topics.

Great work, everyone!!

ROS Workshop for Beginners on 2nd August

We had ROS Workshop at Yurakucho, Tokyo.

We used a custom LiveUSB with ROS preinstalled, so participants could experience ROS without changing their PC environment.

All attendees worked through all the topics.

Great work, everyone!!

ROS + Snappy Ubuntu Core (2) : Let’s make it Snappy!

In the previous post, I introduced Ubuntu Core. Ubuntu Core uses Snappy as its package system, but the so-called “Snappy” packaging system can be tried without installing Ubuntu Core. This time, I will try to make a “Snappy” ROS package on ordinary Ubuntu.

Here is a tutorial on how to make a Snappy ROS package. Let's follow it for now.

Before doing this, we recommend reading through the Snapcraft Tour tutorials.

The following procedure is what I tried on Ubuntu 16.04 + ROS Kinetic. First, install Snapcraft, the Snappy packaging tool.

$ sudo apt install snapcraft

We need a ROS package to turn into a Snappy package, but typing in our own code is a pain, so let's clone the ros_tutorials source code from GitHub.

$ mkdir -p ~/catkin_ws/src
$ cd ~/catkin_ws/src
$ git clone https://github.com/ros/ros_tutorials.git

Initialize with snapcraft.

$ cd ~/catkin_ws
$ snapcraft init

A directory named snap is created, with a file named snapcraft.yaml inside it. Rewrite this file to include the binaries in roscpp_tutorials. See the tutorial for how to rewrite it; it should be fairly intuitive.
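For reference, a snapcraft.yaml along these lines might look like the following, written here with a shell heredoc. It is a sketch assuming the snapcraft 2.x catkin plugin of that time (keys such as `rosdistro` and `catkin-packages`); the name, version and apps are inferred from the talker-listener_0.1 snap and its wrappers discussed in this series rather than copied from the tutorial, so check the tutorial for the authoritative version.

```shell
#!/bin/sh
# Write a minimal snap/snapcraft.yaml for the talker/listener example.
# Assumes snapcraft 2.x with its catkin plugin; run from ~/catkin_ws.
mkdir -p snap
cat > snap/snapcraft.yaml <<'EOF'
name: talker-listener
version: '0.1'
summary: ROS talker/listener example
description: |
  Runs roscore and the roscpp_tutorials talker and listener nodes.
grade: devel
confinement: devmode

parts:
  ros-tutorials:
    plugin: catkin
    rosdistro: kinetic
    source: .
    catkin-packages: [roscpp_tutorials]

apps:
  roscore:
    command: roscore
    plugs: [network, network-bind]
  talker:
    command: rosrun roscpp_tutorials talker
    plugs: [network, network-bind]
  listener:
    command: rosrun roscpp_tutorials listener
    plugs: [network, network-bind]
EOF
```

Each entry under `apps` becomes a command named `<snap>.<app>`, which matches the talker-listener.listener wrapper examined in the follow-up post.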

Now, let's finally create the package.

$ cd ~/catkin_ws
$ snapcraft

snapcraft starts downloading various things from the network… snapcraft understands catkin workspaces, so it reads package.xml and downloads the necessary packages with rosdep.

You might think, “All the necessary ROS packages are already installed on the system!” However, Snappy puts everything that is needed into the snap package, which runs in its own container. Every time it builds, it downloads the packages, compiles from source code, and installs everything into that container.

After a while, the processing finishes:

Snapped talker-listener_0.1_amd64.snap

A snap file is generated.

To install it, run:

$ sudo snap install --dangerous talker-listener_0.1_amd64.snap

Let's check whether it is installed.

$ snap list
Name             Version    Rev   Developer  Notes
core             16-2.26.9  2381  canonical  -
talker-listener  0.1        x1               -

Let's start the nodes in turn, each in its own terminal.

$ talker-listener.roscore
$ talker-listener.talker
$ talker-listener.listener

You can see the familiar talker and listener nodes start running.

In the next post, let’s look into the snappy package structure.

Amazing montage video incl. NEXTAGE OPEN celebrates MoveIt! 5-year

MoveIt!, the de-facto standard motion planning library for ROS, celebrates the 5th anniversary of its initial release with an amazing compilation of application videos.

This is the 2nd time the MoveIt! maintenance team has made such a montage. Compared with the one from 4 years ago, back in 2013 soon after the software was released, this one shows many more pick-and-place applications.

What also caught my personal interest is that there are some mobile base and subsea rover manipulation apps; mobile base integration is one of the future improvement items of MoveIt! (see this page, “Mobile base integration”). It would be a great contribution if the developers of those apps gave their work back to the upstream MoveIt! software.

As always, NEXTAGE Open, the dual-arm robot whose maintenance TORK actively contributes to and for which we provide support services, appears in the video as well, thanks to the Spanish system integrator Tecnalia, presumably for their work with Airbus.

TORK has been a motivated, skillful supporter of ROS and MoveIt! since our launch in 2013. If you're wondering how to apply MoveIt! to your robot, please consider our hands-on workshop series too.

P.S. The list of all the applications' developers is as follows:

(0:06) Delft Robotics and TU Delft Robotics Institute

(0:09) Techman Robot Inc.

(0:13) Correll Lab, CU Boulder

(0:37) Nuclear & Applied Robotics Group, Univ. of Texas

(0:50) Beta Robots

(0:55) GIRONA UNDERWATER VISION AND ROBOTICS

(1:03) Team VIGIR

(1:34) Honeybee Robotics

(1:49) ROBOTIS

(1:58) TECNALIA

(2:05) Correll Lab, CU Boulder

(2:26) TODO Driving under green blocks

(2:38) ROBOTIS

(2:54) Fetch Robotics

(3:05) Hochschule Ravensburg-Weingarten

(3:12) TU Darmstadt and Taurob GmbH – Team ARGONAUTS

(3:20) isys vision

(3:27) Technical Aspects of Multimodal System Group / Hamburg University

(3:33) Clearpath Robotics

(3:43) Shadow Robot

ROS + Snappy Ubuntu Core (1) : What is it?

What are the challenges in bringing an application marketplace like the iPhone's App Store or Android's Google Play into the world of robots? ROS has been looking into a “Robot App Store” since its inception, but it has not been realized yet.

In this context, the recent appearance of a mechanism called Ubuntu Snappy Core seems likely to contribute greatly to opening up a Robot App Store.

I'd like to write about Ubuntu Snappy Core and ROS over the next several entries.

The circumstances of Ubuntu

Ubuntu, the main base operating system that ROS depends on, has two regular releases a year, each supported for nine months. There is also an LTS (Long Term Support) release every two years, supported for five years. People seeking practical use keep using the stable LTS, while those adopting the latest technology use the regular releases for new development.

However, the devices Ubuntu targets are spreading from desktop PCs and servers to edge devices such as IoT devices and routers. Unfortunately, the Ubuntu Phone never came out…

For these devices, from a security point of view, continuous updates at irregular, finer intervals are indispensable, rather than synchronized releases like Ubuntu's. Fault tolerance is also required, such as rolling back when software containing defects has been delivered.

Snappy Ubuntu Core

In response to these demands, Ubuntu has developed a mechanism called (Snappy) Ubuntu Core for IoT and edge devices.

The idea is to separate the OS from the device drivers and the kernel from the applications, so that each can be updated independently and in short cycles.

The circumstances of ROS

Robots can also be seen as a kind of IoT or edge device, so this Snappy package system may become mainstream for ROS in the future. The ROS release system also seems to be showing its limits.

Until now, ROS has made synchronized releases like Ubuntu. However, with one release a year, it seems too slow to adopt fast-moving technologies. On the other hand, when ROS is used in business, stable operation takes priority, and users tend to be conservative and avoid frequent updates.

Also, ROS packages depend on many external libraries. Every time the API of an external library changes, the ROS packages need to follow it, and after adapting a package to a specification change, its operation must be checked again.

As a result, more packages drop out of each release. Even packages that are necessary and commonly used are sometimes left unreleased, or released late, because no maintainer is available to make the required fixes.

If you are selling robot products based on ROS, you cannot know what specification changes or malfunctions an Ubuntu or ROS update will bring, and dealing with them takes huge resources.

Based on the above, we anticipate that Snappy ROS systems will become mainstream in the future.

It is reassuring that a robot engineer working for Canonical, Mr. Kyle Fazzari, is actively sharing information. The following series of blog posts and videos released in April is also a must-see.

In the next post, we'll look into how to create a Snappy-based ROS package.

Roomblock(4): Low Cost LIDAR: RPLIDAR A2

In the previous post, we explained the Raspberry Pi, which communicates with the Roomba.

Roomblock uses a low-cost RPLIDAR A2.

It is very easy to use, and inexpensive.

Range data in rviz

This sensor is ROS-ready: you can view its data with a single command line.

Scan matching

The laser_scan_matcher package helps you estimate the sensor's motion from laser scan data alone, by scan matching.

In the next post, we’ll show the 3D Printable frame structure for Roomblock.

We share how to build Roomblock at Instructables. Please try it at your own risk.

Roomblock: Autonomous Robot using Roomba, Raspberry Pi, and RPLIDAR(3)

In the previous post, we introduced the ROI connector used to communicate with the Roomba. Roomblock is controlled by a Raspberry Pi, a low-cost ARM-based single-board computer. We use a Raspberry Pi 2 with Ubuntu and ROS installed.

Raspberry Pi 2

The ROI connector on the Roomba and the Raspberry Pi are connected with a USB-serial adapter cable.

USB-Serial adapter cable
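Roomblock talks to the Roomba through a ROS driver, but underneath it is a plain serial byte protocol, the iRobot Open Interface (OI). As a rough sketch, assuming the adapter appears as /dev/ttyUSB0 and the Roomba uses the OI default of 115200 baud, the Start (128) and Safe (131) opcodes can be sent from the shell. The device path and the function name are assumptions, and the ROS driver normally does all of this for you.

```shell
#!/bin/sh
# Send iRobot Open Interface opcodes over a serial port (sketch).
# OI opcodes: 128 = Start, 131 = Safe mode.  Octal escapes: \200 = 128, \203 = 131.
oi_start_safe() {
  port=${1:-/dev/ttyUSB0}        # assumed device name of the USB-serial adapter
  printf '\200\203' > "$port"
}

# On a real robot, configure the port first (GNU stty), then send:
#   stty -F /dev/ttyUSB0 115200 raw -echo
#   oi_start_safe /dev/ttyUSB0
```

`raw -echo` keeps the terminal layer from translating or echoing bytes, which matters because OI commands are binary rather than text.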

In the next post, we will explain LIDAR for Roomblock.

We share how to build Roomblock at Instructables. Please try it at your own risk.

Roomblock: Autonomous Robot using Roomba, Raspberry Pi, and RPLIDAR (2)

You can use the Roomba 500, 600, 700 and 800 series as the base of Roomblock, which we introduced in the previous post. They have a serial port for communicating with external computers. Note that the flagship Roomba 900 series has no serial port, so you cannot use it.

You can see the serial port as the picture shows. Be careful not to cut your fingers or break your Roomba!

Roomba 500 series ROI connector

Roomba 700 series ROI connector

In the next post, we will show how to connect Raspberry Pi computer and Roomba.

We share how to build Roomblock at Instructables. Please try it at your own risk.

Mapping Experiment in a Large Building

We did a very rough evaluation of ROS mapping packages in a relatively large building, including loop closure. Loop closure is a difficult issue for mapping, and the results show that some packages can close the loop even with default parameters.

Generated map(from left, gmapping, slam_karto, hector_slam, cartographer)

Note that this result does not compare the true performance of each algorithm; we just used nearly default parameters for each package. We recommend that you choose a mapping package for your own problem yourself, and ROS is well suited for doing so.

Roomblock: Autonomous Robot using Roomba, Raspberry Pi, and RPLIDAR (1)

We are using a robot named “Roomblock” as teaching material for our ROS workshops. Roomba is wonderful not only for cleaning rooms but also for learning robotics. Roomba has a serial port for communicating with a PC and, of course, with a ROS system.

You can convince your family to buy a Roomba, of course for cleaning 🙂

The first Roomba I bought, back in 2007 (now broken) 🙁

In the next post, we’ll show more about Roomba's serial port for communicating with a PC.

We have shared instructions for building Roomblock on Instructables. Please try it at your own risk.

ROS USB camera driver for Kinetic

A ROS USB camera driver for Kinetic, a long-pending problem, has finally been released!

Although these packages were widely used in Indigo, they had recently been without an active maintainer, so they could not be used in Kinetic.

A volunteer group named Orphaned Package Maintainers was launched, and a system has been established for releasing such packages during the transition to Kinetic.

In addition, it has become possible to freely choose from the following six additional drivers. Enjoy ROS-CV programming!

Autonomous Navigation Demo and Experiments at Meijo Univ.

We had a chance to perform a demo and experiments with our autonomous navigation robot at Meijo University, Nagoya, Aichi. Our Roomba-based autonomous robot “Roomblock” impressed the students. ROS should help them accelerate their studies.

We were also able to run mapping experiments with the robot in a beautiful campus building. We will report the results in upcoming posts.

Updated MoveIt! Now Comes with Trajectory Introspection

With the package update in early June 2017, MoveIt! now lets you introspect a planned trajectory pose by pose.

Using the newly added trajectory slider in RViz, you can visually inspect each waypoint of a trajectory planned by MoveIt!.

As the slider on the left side shows, you can now step through each waypoint of a trajectory planned by MoveIt! for NEXTAGE Open.

See the MoveIt! tutorial for how to activate this feature.

New-Generation Mobile Robot, TurtleBot3!

We had a meeting with Mr. Shibata and Ms. Morinaga of ROBOTIS Japan in Yurakucho, Tokyo.

Have you checked out TurtleBot3 yet?

TB3 will be released this summer. We can’t wait for it!

Visit the following websites for details!

http://www.turtlebot.com/

http://turtlebot3.robotis.com/en/latest/

https://github.com/ROBOTIS-GIT/turtlebot3

Thank you for visiting our office today!

Impedance Control using Kawada’s Hironx Dual-arm Robot

Here’s an advanced use case of the NEXTAGE Open (Hironx) robot.

A robot at the Manipulation Research Group at AIST (Japan) is equipped with JR3 6-axis force sensors at the tips of its arms. Using the external force measured by these sensors, impedance control is used to constrain the pose of the robot’s end-effector.

Interested users can take a look at this wiki page for how to configure the driver. Technically, other types of force sensors can be used as well.
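This post does not describe AIST's controller in detail, but the underlying idea can be illustrated with the textbook 1-DOF impedance model M·x'' + D·x' + K·x = F_ext, where x is the end-effector's deviation from its reference pose and F_ext is the measured external force. The Python sketch below integrates that model in the admittance form commonly used on position-controlled arms: each control cycle turns a force reading into a pose offset that would be added to the commanded pose. The class name, gains, time step, and control rate are illustrative assumptions, not values from the Hironx driver.

```python
from dataclasses import dataclass


@dataclass
class ImpedanceAxis:
    """Hypothetical 1-DOF impedance model: M*x'' + D*x' + K*x = f_ext."""
    mass: float = 1.0         # virtual inertia M [kg] (assumed)
    damping: float = 20.0     # virtual damping D [N*s/m] (assumed)
    stiffness: float = 100.0  # virtual stiffness K [N/m] (assumed)
    x: float = 0.0            # offset from the reference pose [m]
    v: float = 0.0            # velocity of that offset [m/s]

    def step(self, f_ext: float, dt: float) -> float:
        """Advance one control cycle and return the new pose offset."""
        # Solve the impedance model for the acceleration of the offset.
        a = (f_ext - self.damping * self.v - self.stiffness * self.x) / self.mass
        # Semi-implicit Euler: update velocity first, then position.
        self.v += a * dt
        self.x += self.v * dt
        return self.x


# Push on the end-effector with a constant 10 N for 5 s at an assumed 1 kHz rate.
axis = ImpedanceAxis()
offset = 0.0
for _ in range(5000):
    offset = axis.step(f_ext=10.0, dt=0.001)
print(round(offset, 3))  # settles near f_ext / K = 0.1 m
```

With these gains the model is critically damped, so the offset settles at F_ext / K without overshoot; a larger K keeps the end-effector closer to its reference pose under the same force. A real 6-axis controller would apply such a model per Cartesian axis (or with full matrices) inside the robot's control loop.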

Need customization? Please contact us.

NEXTAGE OPEN software services progress

As TORK reaches its 2-year mark on August 8th, 2015, we give an update on our ROS-based opensource software support for NEXTAGE OPEN, one of our first business services.

We have received 74 support requests so far from support service users. Each request has been answered or fixed by us, often in collaboration with developers in the opensource community.

User case: Tecnalia

Tecnalia (Spain) has been working on a factory automation project at Airbus, taking advantage of the dexterity and the ability to collaborate with humans that dual-arm robots provide. Their analysis and work are reported in an academic paper[1].

Thank you to everyone who has supported us, especially Kawada Industries and Kawada Robotics. Your understanding and generosity nourish opensource robotics.

(Photo: at the 4th ROS Japan User Group meetup. Since the NEXTAGE OPEN software also comes with a ROS-based simulation, anyone interested can try it out and even hold meetup sessions like this one using it.)

TORK speaks about opensource robotics research and business at RSJ Seminar (09/11/2015)

Two of TORK's board members will speak at a day-long seminar entitled “Opensource Software in Robotics, and its real-world examples,” hosted by the RSJ (Robotics Society of Japan) in September 2015.

The following information is cited from the seminar's official web page [Link to RSJ top page] (where you can also register). Reportedly, watching the talks online in real time will be possible on a request basis.

- Seminar Language: Japanese

- Date: Fri 9/11/2015, 9:50-16:40 (doors open 9:20)

- Remote: Paid webinar style will be available. Registration opens in early August.

- Venue: Chuo University, Korakuen Campus, Bldg. 5, Rm. 5134 (1-13-27 Kasuga, Bunkyo-ku, Tokyo)

- Speakers (titles translated by TORK)

- #1 10:00-11:00 Kei Okada (U. Tokyo) The Present and Future of Opensource Robotics Software in Research

- #2 11:10-12:10 Yoshitaka Hara (CIT) ROS-based autonomous robot for outdoor pedestrian space

- #3 13:10-14:10 Koichi Nagashima (Kawada Robotics Inc.) Humanoid research robot

- #4 14:20-15:20 Isaac I.Y. Saito (TORK) Facilitation of Opensource Robotics Software in Industry, and its future

- #5 15:30-16:30 Minoru Yamauchi (Toyota Motor Corporation) Toyota Partner Robot

We haven’t found any English information about this seminar yet. If you are interested, we (TORK) may be able to provide assistance, so drop us a line at info[a-t]opensource-robotics.tokyo.jp

TORK Adds Another ROS wiki Mirror

As we mentioned a few months ago, mirror sites for ROS documentation are much needed, not only for when the original website is inaccessible but for a number of other reasons as well.

In the ros-users forum, there was recently an announcement about the status of ROS wiki mirroring and an improved maintenance method for mirrors. By the time of that announcement, TORK had started mirroring the wiki and the API docs as well, and our mirror is now listed among the mirrors on wiki.ros.org.

Needless to say, this list of mirror sites is also inaccessible when the website is down, so I’ve updated a “mirror” of the list of mirrors.

Mirror sites of wiki.ros.org

According to status.ros.org, the ROS wiki (wiki.ros.org) has been busy lately, handling more than 60 requests per minute on average (as seen on 2/20/2014). Just in case the web server needs to take a break for a moment, there are mirrors all over the world. Their URLs are listed on this blog.

Announcing denso, a ROS I/F for industrial arms from Denso

From ros-users.

We’re happy to announce denso, a ROS/MoveIt! interface for industrial manipulators from Denso Wave Inc.

Key points:

- Currently works with the VS-060, a vertical multi-joint robot from Denso.

- ROS communicates with the embedded controller computer, which has industry-proven reliability, using a standardized UDP-based protocol (ORiN). There is also a mechanism to detect faulty commands. As a whole, the system therefore maintains the same level of safety as Denso's commercial product setup.

- However, the ROS interface is still experimental, and feedback is highly appreciated. You can try out manipulation in RViz even without the real robot.

- Work done by the JSK lab at U-Tokyo. Maintained by Tokyo Opensource Robotics Kyokai Association.

Lastly, credit goes to Denso, which provided the robot’s model to the opensource community.

Kei Okada, Ryohei Ueda

(1/5/2014) The package has been renamed from densowave to denso.

Announcement: Starting ROS Support Services

Tokyo Opensource Robotics Kyokai Association (TORK) was established in August 2013 as an incorporated association aiming to build and develop the discipline of robotics based on open source software. So far, we have been supporting user communities of open source software such as OpenRTM and ROS. We are now pleased to announce that, starting in January 2014, we will invite corporate members and, at the same time, start support services that specifically help them solve various problems related to ROS implementations.